Jiajun Fan

I am a Computer Science Ph.D. student at UIUC. My research focuses on autonomous RL post-training for large generative models — making diffusion/flow models and multi-modal reasoning LLMs continuously self-improve with less and less human intervention. Previously, I pushed RL to superhuman performance: breaking 24 Atari world records and outperforming Agent57 with 500× less data.

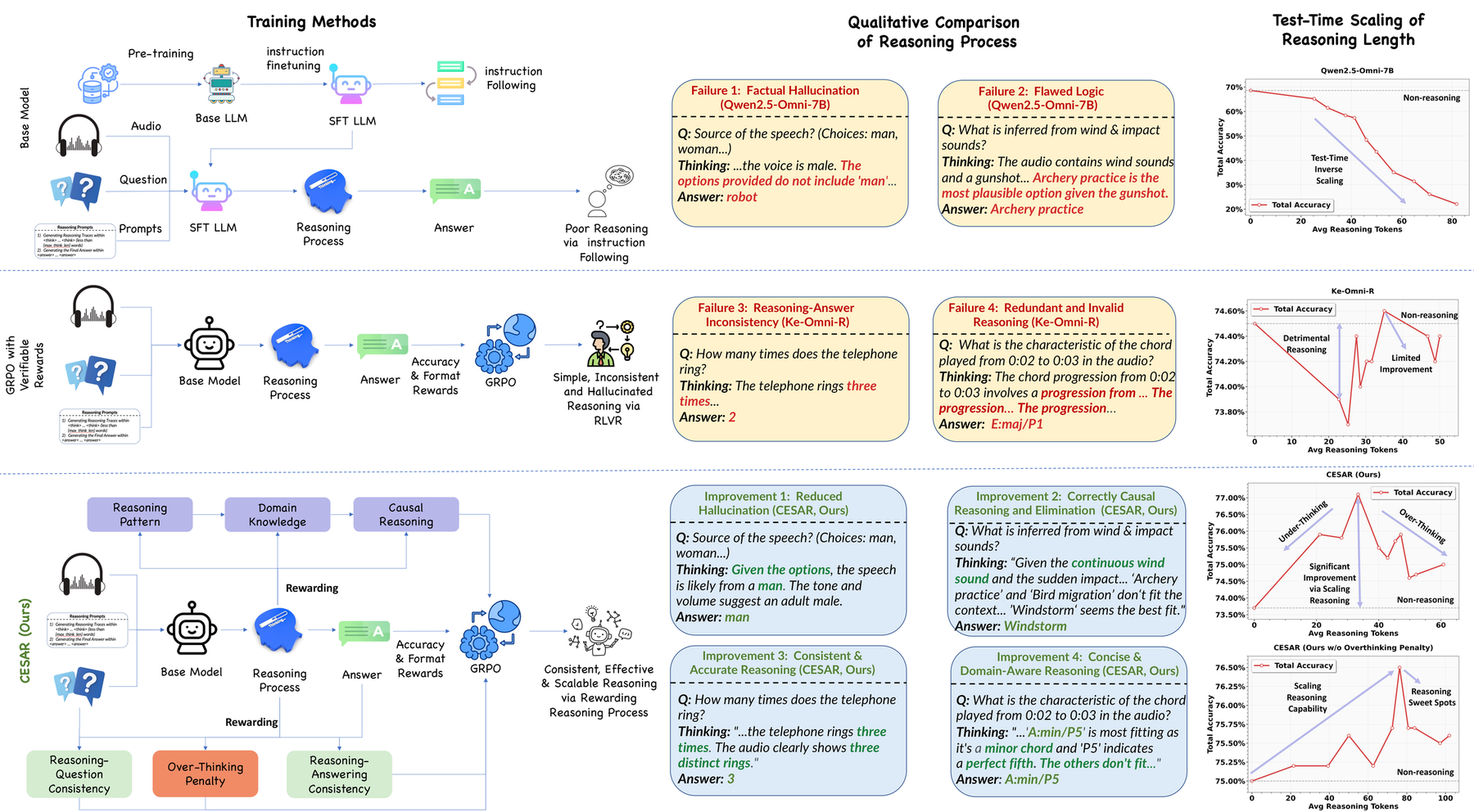

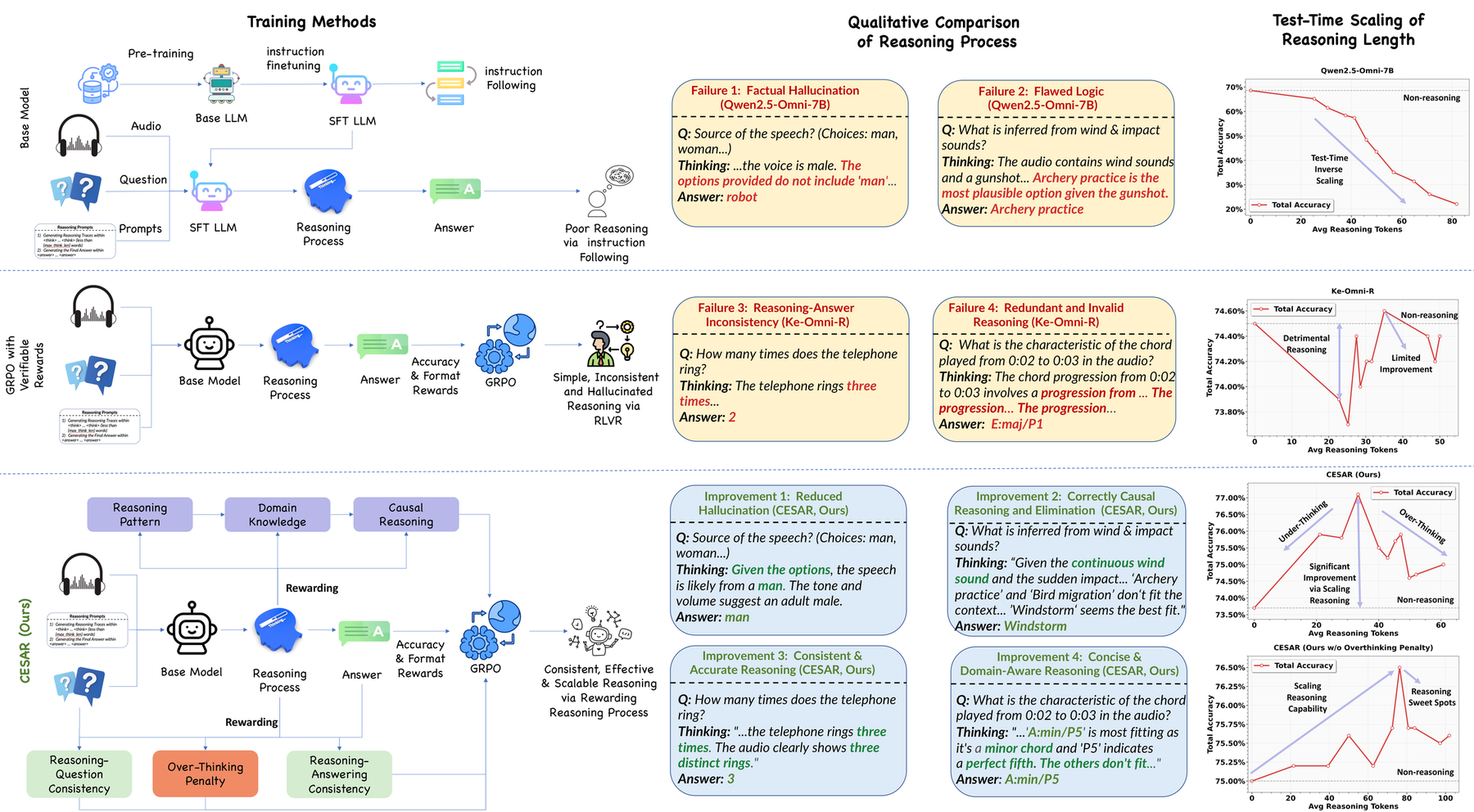

- Jan 2026 Accept: 2 papers at ICLR 2026 (CESAR & SP-VLA). See you in Rio 🇧🇷

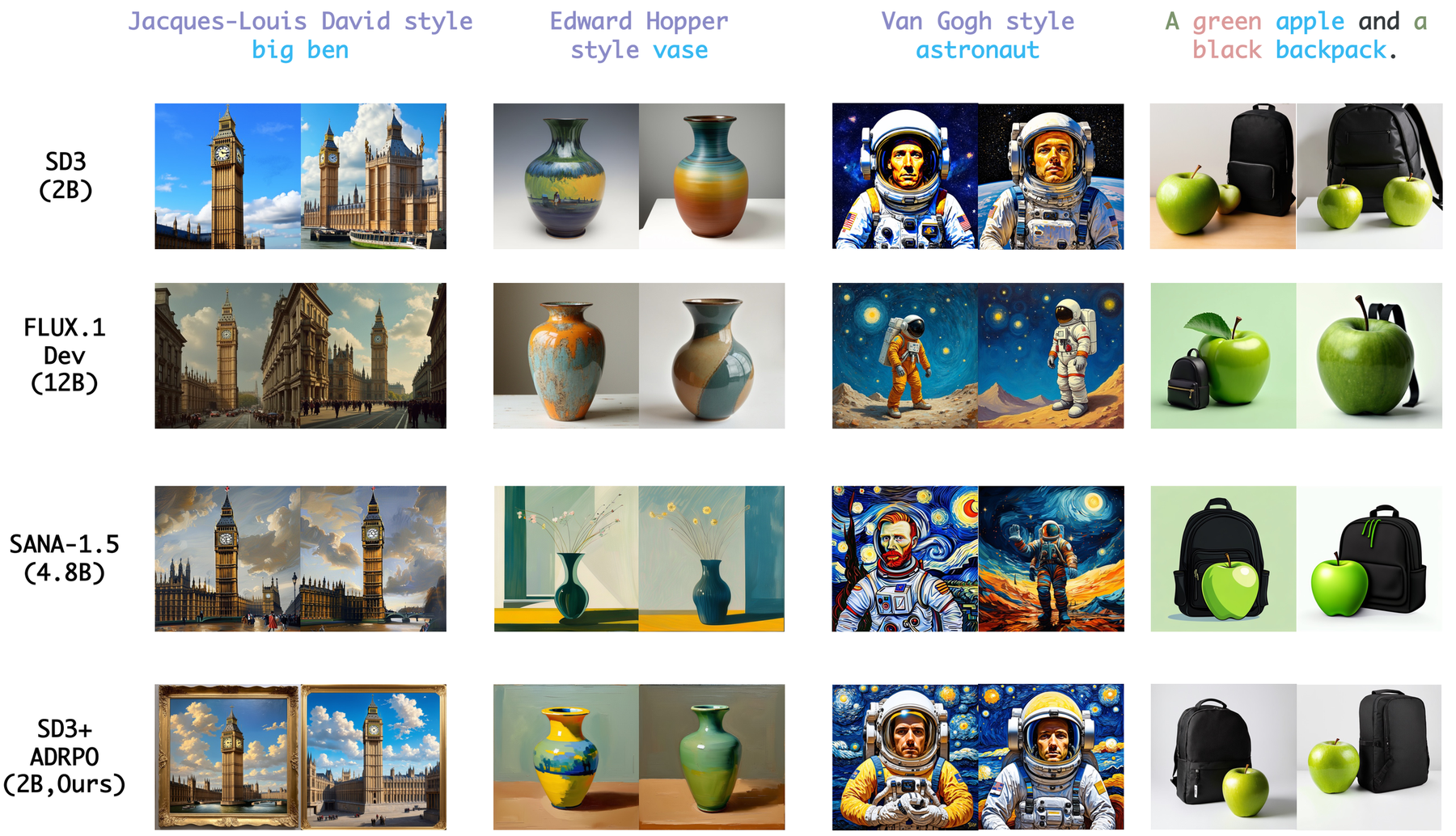

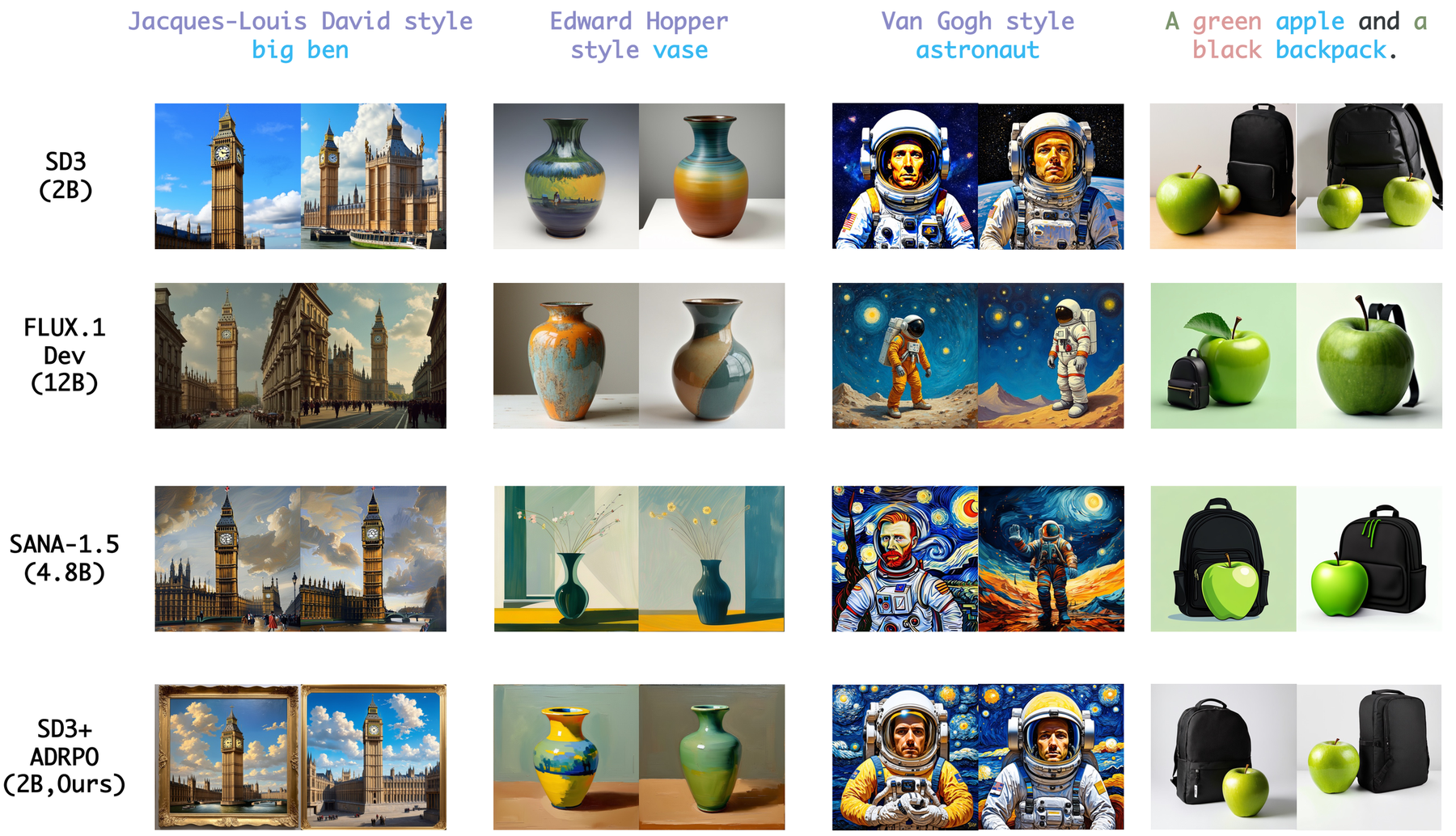

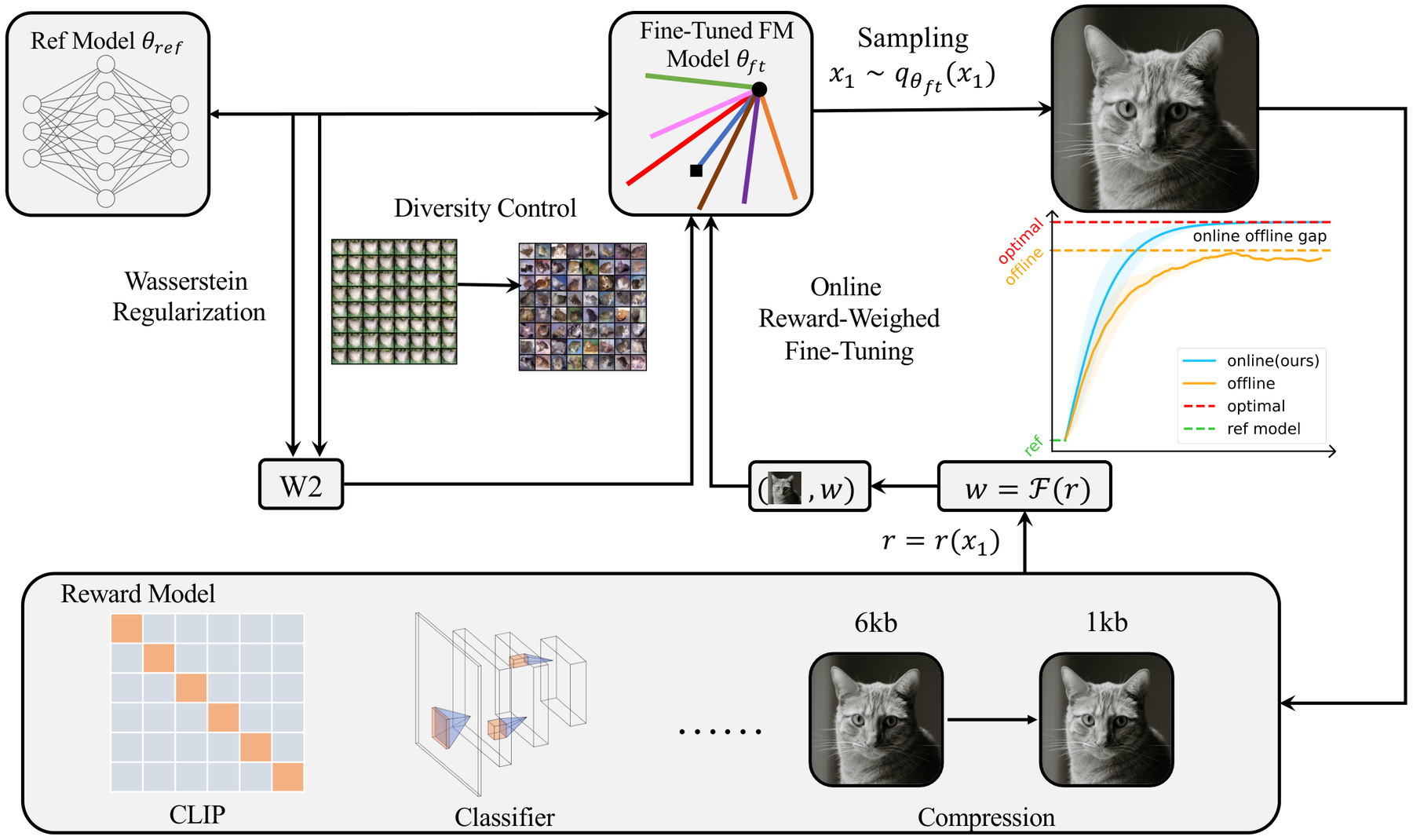

- Sep 2025 Accept: 2 papers at NeurIPS 2025 (ADRPO & VarCon). See you in San Diego 🌊

- Jun 2025 Accept: PRANCE accepted at IEEE TPAMI.

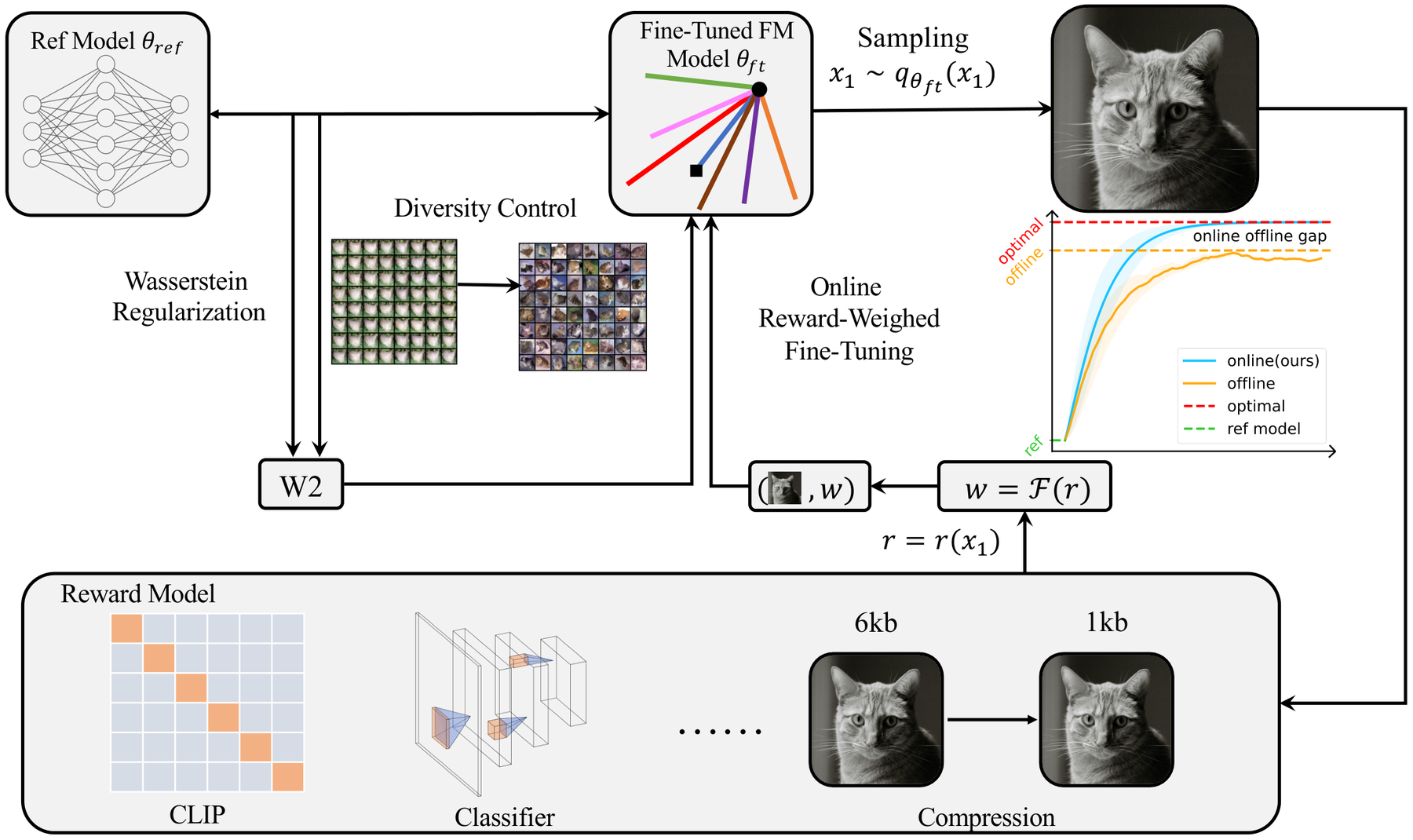

- Feb 2025 Accept: ORW-CFM-W2 (Flow Matching self-evolution) accepted at ICLR 2025.

- Jan 2025 Service: Reviewer for ICLR 2025, NeurIPS 2024, CVPR 2026, AAAI 2025, and AISTATS 2025.

- Aug 2024 🎓 Started Ph.D. at UIUC CS (GPA 4.0/4.0).

- Jan 2023 Oral · Top 5%: LBC at ICLR 2023, ranked 5/4176; broke 24 Atari world records.

* = first/co-first author · Full list on Google Scholar / Publications page

- ICLR 2023 Oral
- Venues: ICLR · NeurIPS · ICML · TPAMI
- 24 Atari world records broken by LBC (ICLR'23 Oral)
- 500× less data than Agent57
- Beats Gemini 2.5 Pro

Computer Science

Making AI Systems That Improve Themselves

Most of today's AI models stay frozen once training ends. I work to change that: AI that never stops getting better, with progressively less human scaffolding.

🎖 Selected Awards

- National Scholarship ×2, Top 1% — Nankai Univ.

- Ranked 1st of 83 in major, Nankai Univ.

- Outstanding Graduates (Top 1%) — Nankai Univ.

- Tang Lixin Scholarship (Top 1%)

- GPA 4.0/4.0 — UIUC Ph.D.

- GPA 3.97/4.0, Top 1.3% — Tsinghua M.Eng.

🔍 Reviewer

- ICLR 2024–2026

- NeurIPS 2022–2025

- ICML 2023–2026

- CVPR 2026

- AAAI 2025 · AISTATS 2025 · KDD 2024

Happy to discuss research, internships, or collaborations. Best reached by email.

📧 jiajunf3@illinois.edu · 🏛 Siebel Center for CS, UIUC · CV (PDF)